Continuing our anniversary retrospective of content we've created during the past 15 years, this time we offer up, as it originally appeared in May 2004, an article by a renowned industrial safety expert that cautioned about the steadily growing dependency that control systems had on software and, as a result, why it was imperative that we recognize the need to pay a lot more attention to software reliability. Eight years later, the incentive to do so remains vitally important.

Of all the components used in today's generation of control systems, hardware is the one whose failures are most often studied for root cause. The root causes of software failure, on the other hand, are rarely studied or well understood.

In the field studies that have been conducted, some theories on what causes software failure have emerged, but even those are not widely known or followed by software engineers. Similarly, few practitioners know the rules of software reliability or take the time to understand how to create reliable software. Why? In part because software development tool producers work hard to make control software developers think it's easy to produce reliable software.

No one, however, can ignore the importance of software reliability, and as control systems grow in functionality and complexity, machine and production equipment builders must increasingly depend on software to carry the load.

We'll address these issues here and include examples of software failures, the root causes of those failures, some rules for avoiding those causes and some guidance in evaluating software reliability in control system products.

More complex control

Powerful new tools enable us to develop software-dependent control systems that are increasingly complex. Software reliability, the ability of this software to perform the expected function when needed, is essential. Yet, how often do we hear, "The network is down," or "My computer froze up--again," or "How long has this operator station been frozen?" Our experience with software is far from perfect.

As industry's dependency on software increases, so does the incentive to develop higher levels of software reliability.

Software failure happens

Consider why software fails; the next few examples offer some insight. The console of an industrial machine operator had functioned normally for two years. During one of a newly hired operator's first shifts, shortly after an alarm acknowledgment, his console stopped updating the CRT screen and would not respond to commands. The unit was powered down and successfully restarted, and no hardware failures were found.

With more than 400 units in the field and 8 million operating hours, the manufacturer found it difficult to believe that a significant software fault existed in such a mature product. An extensive testing procedure produced no further failures. A test engineer then visited the site and interviewed the new operator, noting, "this guy is very fast on the keyboard." That small observation allowed the problem to be traced: further testing revealed that if the alarm acknowledgment key was struck within 32 msec of the alarm silence key, a software routine would overwrite a critical area of memory and the computer would fail.

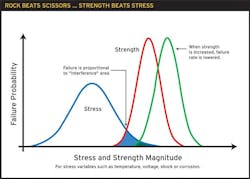

Stress vs. strength: the stress curve shows the probability of any particular stress value, and the strength curve indicates the chances of any particular strength value in a collection of products. The area where the two curves overlap represents failure conditions. When the product design produces higher strength levels, failure probability decreases.

At another plant an operator requested that a data file be displayed on the terminal and the computer failed. This was not a new request--the same data file had been displayed successfully on the system numerous times before.

The problem was traced to a software module that did not always append a terminating "null zero" to the end of the file name string. On most occasions the file name was stored in memory that had been cleared by writing zeros into all locations, so the operation succeeded and the software fault remained hidden. On the occasion that the dynamic memory allocation algorithm chose memory that had not been cleared, the system failed. The failure occurred only when the module omitted the zero and, at the same time, the string was allocated in an uncleared area of memory.
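The hidden fault can be reproduced in a few lines. The sketch below simulates C-style memory in Python with a `bytearray`; the buffer size, the stale-byte pattern and all function names are hypothetical. The faulty copy routine works whenever the allocator hands it zeroed memory and fails only when it receives uncleared memory, which is the combination of events described above.

```python
# Minimal simulation of a latent string-termination fault.
# Buffer size, fill pattern and function names are hypothetical.

BUF_SIZE = 16
STALE_BYTE = 0xAA  # stands in for leftover data in uncleared memory


def allocate(cleared: bool) -> bytearray:
    """Return a buffer that is zero-filled or still holds stale data."""
    if cleared:
        return bytearray(BUF_SIZE)
    return bytearray([STALE_BYTE] * BUF_SIZE)


def store_name_faulty(buf: bytearray, name: bytes) -> None:
    """The fault: copies the name but never appends the terminating zero."""
    buf[: len(name)] = name


def store_name_fixed(buf: bytearray, name: bytes) -> None:
    """Copies the name and always writes the terminating zero."""
    if len(name) + 1 > len(buf):
        raise ValueError("name does not fit with its terminator")
    buf[: len(name)] = name
    buf[len(name)] = 0


def read_name(buf: bytearray) -> bytes:
    """C-style read: the name ends at the first zero byte."""
    end = buf.find(0)
    if end == -1:
        # In C the read would run past the buffer into unrelated memory.
        raise RuntimeError("unterminated file name")
    return bytes(buf[:end])


if __name__ == "__main__":
    for cleared in (True, False):
        buf = allocate(cleared)
        store_name_faulty(buf, b"TREND.DAT")
        try:
            print(cleared, read_name(buf))
        except RuntimeError as err:
            print(cleared, "FAILED:", err)
```

Testing the faulty routine only against freshly cleared buffers would never expose the fault. The fixed routine writes the terminator itself, so its behavior no longer depends on what the allocator returns.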

Consider a third example, in which a computer stopped working after it received a message on its communication network. The message came from an incompatible operating system: while it used the correct "frame" format, it used different data formats within the frame. Because the computer did not check for a compatible data format, the data bits within the frame were interpreted incorrectly, and the computer failed within a few seconds.
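A receiving node can defend against this kind of message by validating the frame before it interprets any data. The minimal sketch below assumes a hypothetical frame layout (a one-byte data-format identifier, a one-byte payload length, then the payload); real control networks define their own headers, and nothing here reflects a particular protocol.

```python
import struct

# Hypothetical frame layout: 1-byte data-format ID, 1-byte payload length,
# then the payload. Format 0x02 carries two unsigned 16-bit big-endian values.
SUPPORTED_FORMAT = 0x02
HEADER = struct.Struct(">BB")
PAYLOAD = struct.Struct(">HH")


class FrameRejected(Exception):
    """Raised when a frame fails validation; the payload is never interpreted."""


def parse_frame(frame: bytes) -> tuple[int, int]:
    if len(frame) < HEADER.size:
        raise FrameRejected("frame shorter than header")
    fmt_id, length = HEADER.unpack_from(frame, 0)
    # Check the data format before interpreting a single payload bit.
    if fmt_id != SUPPORTED_FORMAT:
        raise FrameRejected(f"unsupported data format 0x{fmt_id:02X}")
    payload = frame[HEADER.size:]
    if length != len(payload) or length != PAYLOAD.size:
        raise FrameRejected("payload length does not match format")
    return PAYLOAD.unpack(payload)
```

A frame that is correctly framed but carries an unknown format ID is rejected at the header check, instead of having its bits decoded with the wrong layout.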

Many examples of software failure are documented and most of them seem to contain some combination of events considered unlikely, rare or even impossible.

Stress vs. strength

Reliability engineering provides the stress-vs.-strength concept. Failures occur when a stress is greater than a corresponding strength. While this concept comes from mechanical and civil engineering and is most frequently applied to stress as a mechanical force and strength as a structure's physical ability to resist that force, the same concept is applicable to software reliability.

A.C. Brombacher applies this concept to electronic hardware reliability. In his book, "Reliability by Design," Brombacher notes that failures occur when some stress or combination of stressors exceeds the associated strength (susceptibility) of the system (See above figure). Stress, or the combination of stressors, is represented by a curve of the probability of any particular stress value. The strength curve indicates the chances of any particular strength value in a collection of products. The area where the two curves overlap represents failure conditions. Within a product, strength is the measure of resistance to stress. When the product design produces higher strength levels, the product is much less likely to fail.
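The stress-vs.-strength picture can be made quantitative. A common textbook interference model, assumed here rather than taken from Brombacher's book, treats stress and strength as independent normal distributions. The failure probability is then the chance that the margin (strength minus stress) is negative: P(fail) = Φ(−(μ_strength − μ_stress) / √(σ_stress² + σ_strength²)), where Φ is the standard normal cumulative distribution.

```python
import math


def failure_probability(stress_mean: float, stress_sd: float,
                        strength_mean: float, strength_sd: float) -> float:
    """P(stress > strength) for independent, normally distributed stress and
    strength (an assumed interference model, for illustration only)."""
    margin_mean = strength_mean - stress_mean
    margin_sd = math.hypot(stress_sd, strength_sd)  # sqrt(sd1**2 + sd2**2)
    z = -margin_mean / margin_sd
    # Standard normal CDF evaluated at z.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
```

With stress distributed as N(100, 3) and strength as N(105, 4), in arbitrary units, the combined spread is 5 and the margin is one standard deviation, giving roughly a 16% failure probability. Raising the strength mean to 110 cuts it to roughly 2.3%, which is the "higher strength, lower failure probability" effect shown in the figure.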

Electronic devices have many potential stressors. Environmental and physical stressors include heat, humidity, chemicals, shock, vibration, electrical surge, electrostatic discharge, radio waves and others. Operational stressors include incorrect commands from an operator, incorrect maintenance procedures, bad calibration, improper grounding, etc.

Now, that makes sense for hardware, but how does this concept apply to software? What are the stressors on a software system? Just as with hardware, software failure occurs when the stress is greater than the strength. The strength of a software system can be measured by the number of software faults or design errors (bugs) present, the testability of the system, and the amount of software error checking and online data validation. The stress on a software system depends on the combination of inputs, input timing and stored data seen by the CPU. Inputs and their timing may be a function of other computer systems, operators or both.

Control systems depend on software and this dependency is increasing. There is an important need to evaluate software reliability, but very little is now being done. The stress-vs.-strength concept helps identify the important factors in software reliability. Depending on the required level of software reliability, the following relevant areas and questions need to be considered:

Software Process

• Does a formal process exist for software creation?

• Do all software developers follow this software process?

• Has the process been audited by a third party such as FM or TÜV?

• Does the process conform to applicable standards such as ISO 9000-3, DIN V VDE 0801/A1 or IEC 61508?

Testability

• What execution variability factors exist in the system?

• Does the system involve multitasking?

• Is there fixed or dynamic memory allocation?

Software Diagnostics

• Does the design include program flow control?

• Is the program flow timed?

• How are the communication messages checked?

• How often is the data integrity checked?

Improve strength and reliability

Higher levels of software reliability result from improving software strength. Most of the efforts in this area have focused on improving the software development process and removing software faults. Because software is created by human beings, who make mistakes, the design process is never perfect. Companies expend considerable resources to establish and monitor the software development process. These efforts aim to increase strength by reducing the number of faults in the software. This approach can be very successful, depending on its implementation, and attempts are being made to audit the effectiveness of the software process. For example, the ISO 9000-3 standard establishes required practices.

The Software Engineering Institute has created a five-level Capability Maturity Model (CMM) for software, in which Level 5 represents the most mature process. In addition, companies in regulated industries such as pharmaceuticals audit software vendors to ensure compliance with internal software reliability standards. By reducing the number of faults in software and thereby increasing its strength, these efforts have begun to improve software reliability.


Another factor that influences the number of faults in a software system is its testability. Software testing may or may not be effective, depending on the variability of execution. A test program cannot be complete, for example, when software executes differently each time it is loaded; in that situation, the number of test cases needed becomes virtually infinite.

Execution variability increases with dynamic memory allocation, the number of CPU interrupts, the number of tasks in a multitasking environment and similar factors. All of these factors need to be considered when the potential reliability of software is evaluated. Adding sources of variability such as multitasking or dynamic memory allocation decreases testability, which indicates lower strength and reliability.
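A rough count shows why execution variability defeats exhaustive testing. The sketch assumes a deliberately simplified model: several independent tasks, each a fixed sequence of atomic steps, with a scheduler that may switch tasks after any step. The number of possible execution orders is then a multinomial coefficient. The model ignores interrupts, input timing and memory allocation, all of which only add to the count.

```python
from math import factorial


def interleavings(tasks: int, steps_per_task: int) -> int:
    """Distinct execution orders of `tasks` independent tasks, each a fixed
    sequence of `steps_per_task` atomic steps, when the scheduler may switch
    tasks after any step: (tasks * steps)! / (steps!) ** tasks."""
    total = factorial(tasks * steps_per_task)
    return total // factorial(steps_per_task) ** tasks
```

Two tasks of three steps each already have 20 possible orderings; three tasks of ten steps each have more than 5.5 trillion, far beyond what any test program can cover one case at a time.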

Software diagnostics also increase software strength, primarily in two ways. First, they can reject potentially fatal data. Second, they can verify online that the software is executing properly. In fact, international standards for safety-critical software (DIN V VDE 0801, IEC 61508) specify software diagnostic techniques such as program flow control and plausibility assertions. These diagnostics are required in safety-critical software approved by recognized third parties such as FM in the U.S. and TÜV in Germany.

One software diagnostic technique is program flow control. In this procedure, each software component that must execute in sequence writes an indicator to memory. Subsequently, each software component verifies that necessary predecessors have done their job. When a given sequence finishes, verification is done to confirm that all the necessary software components have run in the proper sequence. When the operations are time critical, the indicators can include time stamps. The times are checked to verify that maximums have not been exceeded.
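A minimal sketch of program flow control follows, assuming a fixed, known sequence of components per execution cycle. The component names, the single end-of-sequence time limit and the injectable clock (used so the checks can be tested) are illustrative choices, not requirements of any standard.

```python
import time
from typing import Callable, Optional


class FlowControlError(Exception):
    """Raised when components run out of order, are skipped, or run too long."""


class FlowMonitor:
    def __init__(self, sequence: list[str], max_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.sequence = list(sequence)
        self.max_seconds = max_seconds
        self.clock = clock
        self.indicators: list[tuple[str, float]] = []  # (component, time stamp)
        self.started_at: Optional[float] = None

    def start(self) -> None:
        """Begin a new pass through the sequence."""
        self.indicators = []
        self.started_at = self.clock()

    def mark(self, component: str) -> None:
        """Each component calls this when it runs. It verifies that every
        predecessor has already written its indicator, then writes its own."""
        done = len(self.indicators)
        if done >= len(self.sequence) or self.sequence[done] != component:
            raise FlowControlError(f"{component!r} ran out of sequence")
        self.indicators.append((component, self.clock()))

    def finish(self) -> None:
        """End of pass: confirm all components ran, in order, within time."""
        if self.started_at is None:
            raise FlowControlError("finish() called before start()")
        ran = [name for name, _ in self.indicators]
        if ran != self.sequence:
            raise FlowControlError(f"incomplete sequence: {ran}")
        last_stamp = self.indicators[-1][1] if self.indicators else self.started_at
        elapsed = last_stamp - self.started_at
        if elapsed > self.max_seconds:
            raise FlowControlError(
                f"pass took {elapsed:.3f}s, limit {self.max_seconds}s")
```

In a real controller, a `FlowControlError` would be handled by the system's fault response (for example, moving to a safe state) rather than simply propagating as an exception.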

Plausibility assertion checking is another software diagnostic. While program flow control verifies that software components have executed properly in sequence and timing, plausibility assertions check inputs and stored data. The format and content of communication messages are checked before commands are executed. Data is checked to verify that it is within a reasonable range. Pointers into memory arrays must also fall within the valid range for the particular array.
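The sketch below applies plausibility assertions to a hypothetical command that sets a valve position and selects a recipe slot: a range check on the data and a bounds check on the array index, both performed before the command is executed. The limits, the recipe table and all names are invented for illustration.

```python
class PlausibilityError(Exception):
    """Raised when an input or stored value fails a plausibility assertion."""


def check_range(name: str, value: float, low: float, high: float) -> float:
    """Reject values outside [low, high]. NaN fails both comparisons and is
    rejected as well."""
    if not (low <= value <= high):
        raise PlausibilityError(f"{name}={value!r} outside [{low}, {high}]")
    return value


def check_index(name: str, index: int, length: int) -> int:
    """Reject an index (the 'pointer') that falls outside the target array.
    Python would silently accept a negative index, so check explicitly."""
    if not (0 <= index < length):
        raise PlausibilityError(f"{name}={index!r} outside 0..{length - 1}")
    return index


# Hypothetical command handler: valve position in percent, recipe slot number.
RECIPE_SETPOINTS = [72.0, 85.5, 90.0]


def apply_command(valve_percent: float, recipe_slot: int) -> tuple[float, float]:
    check_range("valve_percent", valve_percent, 0.0, 100.0)
    slot = check_index("recipe_slot", recipe_slot, len(RECIPE_SETPOINTS))
    # Only after every assertion passes is the command acted on.
    return valve_percent, RECIPE_SETPOINTS[slot]
```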

All these techniques are considered online software testing. When a software diagnostic finds something not within the predetermined valid range, there usually is an associated software fault. A software diagnostic reporting system records program execution data and fault data. This provides the means for software developers to identify and repair software faults rapidly. This increases the effectiveness of testing before a product is released. Assuming the data is effectively stored, these methods also allow more effective resolution of field failures when they occur.
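One way to structure such a reporting system is sketched below, assuming that recent execution records fit in a fixed-size ring buffer and that each fault report should capture the records that preceded it. Writing the log to nonvolatile storage, which effective field-failure analysis would require, is left out; the class and method names are hypothetical.

```python
import time
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class DiagnosticRecord:
    timestamp: float
    source: str   # software component that produced the record
    detail: str


class DiagnosticLog:
    """Keeps the most recent execution records in a fixed-size ring buffer.
    When a diagnostic reports a fault, the fault is stored together with a
    snapshot of the execution records that led up to it."""

    def __init__(self, trace_capacity: int = 64, fault_capacity: int = 16,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.trace = deque(maxlen=trace_capacity)    # oldest records drop off
        self.faults = deque(maxlen=fault_capacity)   # (fault, trace snapshot)
        self.clock = clock

    def record_execution(self, source: str, detail: str) -> None:
        self.trace.append(DiagnosticRecord(self.clock(), source, detail))

    def report_fault(self, source: str, detail: str) -> DiagnosticRecord:
        fault = DiagnosticRecord(self.clock(), source, detail)
        self.faults.append((fault, list(self.trace)))
        return fault
```

Fixed-capacity buffers keep memory use constant, which avoids adding dynamic allocation, and the execution variability that comes with it, to the diagnostics themselves.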
