As he was in 2003, Dr. William Goble is a partner with Exida, providing safety training and consulting services for users of industrial controls and automation. With more than 30 years of experience, he is a recognized expert in programmable electronic systems analysis, safety and high-availability automation systems, and market analysis.

A safety PLC must meet the requirements of a set of rigorous international standards that cover the design, design methods and testing of software and hardware. Third-party experts enforce the rigor when the products go through the certification process.

While it's possible for a PLC manufacturer to avoid most of the software requirements by asking the user to do extensive testing of the application software, this is rare and most safety PLCs have their software certified for safety. This relieves the user of a great expense throughout the product life.

Safety-certified software must follow a purposeful and unambiguous software-development process. Most of the world's experts agree that this results in software that is more reliable and predictable. It's helpful and reassuring if potential specifiers of programmable safety devices understand that process.

High-Quality Principles Are a Must

The quality principles developed by Juran and Deming are well known in factory operations throughout the world. These principles require that a process be established and followed. While following a process may seem obvious, it's easy to take software quality for granted and shortcut the process after the initial design is completed. This seems to be part of the "software culture" at times, especially when a project gets behind schedule.

As we discussed in Part I last month, the safety-critical software development process emphasizes the V-model, which starts with product requirements.

Requirements reviews determine that all safety-relevant requirements are documented and product validation tests are developed along with product requirements.

Test planning can and should be done while requirements are being finalized. A test plan review provides a good crosscheck of the testability of any given requirement — a test of requirement reasonability. The test plan review might uncover missing requirements before too much design has occurred.

The requirements are considered the foundation of the whole project, and as such should be treated quite seriously by the PLC manufacturer. Each requirement must state the safety function in quantifiable terms. For example: "The analog channel shall detect any faults that cause a value greater than ±2% of span within one second."

An important aspect of the process is the traceability of requirements to tests. While this step makes auditing easier, it also helps the developers identify missing and duplicated requirements. The test effort must show correctness and completeness of fulfilling the product requirements. Correctness means that the software operation performs exactly as it's intended, fulfills the matched requirement, and takes appropriate action for fault detection. Completeness means that all requirements have been met.
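As a rough illustration of requirement-to-test traceability, the sketch below cross-references a list of requirement IDs against the requirements each test claims to verify. The ID scheme (REQ-n, T-n) and the function name are hypothetical, chosen only for this example. They are not taken from any standard or tool.

```python
from collections import Counter

def check_traceability(requirements, test_to_requirements):
    """Cross-check requirement IDs against the tests that claim to cover them.

    requirements: list of requirement IDs (hypothetical naming).
    test_to_requirements: dict mapping a test ID to the requirement IDs it verifies.
    """
    declared = Counter(requirements)
    # A requirement ID listed more than once suggests a duplicated requirement.
    duplicated = sorted(r for r, n in declared.items() if n > 1)
    covered = {r for reqs in test_to_requirements.values() for r in reqs}
    # Declared but never tested: completeness has not been shown.
    untested = sorted(set(declared) - covered)
    # Tested but never declared: possibly a missing requirement.
    orphaned = sorted(covered - set(declared))
    return {"duplicated": duplicated, "untested": untested, "orphaned": orphaned}

report = check_traceability(
    ["REQ-1", "REQ-2", "REQ-2", "REQ-3"],
    {"T-1": ["REQ-1"], "T-2": ["REQ-2", "REQ-9"]},
)
```

Here `report` flags REQ-2 as duplicated, REQ-3 as untested, and REQ-9 as referenced by a test but never declared, which is the kind of gap a traceability review is meant to expose.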

Manage the Changes

It's essential for a safety PLC software development team to maintain control over changing requirements. Documents should be properly identified and include revision history. Formal reviews should be held with meeting minutes that include issue resolution and agreed-upon action items. If decisions are made that affect requirements, the team must go back through the process and judge impact to other parts of the product.

The project manager must review and assure completion of all action items. More importantly, the team must translate informal resolutions of design issues to the design documents. Not every design decision is made by a formal review; many decisions can and should be made at the level appropriate for implementing the decision. When decisions are made in this manner, the appropriate design documents should be updated. The document trail serves to inform all project stakeholders of the changes.

Safety PLC Software Techniques

Failures in software do not occur randomly. Software does not wear out. All software failures are designed into the system.

When that certain combination of inputs, timing or data presents the right conditions to the system, it will fail every time. For this reason, failures in software systems are known as systematic failures. To make certain the software is performing as intended, the software must check itself to make sure it has done what it thinks must be done.

Software diagnostics are programmed into embedded code. One of the most effective software diagnostics is "flow control." Program flow checking makes sure essential functions execute in the correct sequence. At key points in the program, a "flag" is set, preferably with a time stamp (Figure 1). At the end of each program scan, the flags are checked. All flags must be set in the correct sequence. If time stamps are also used, the time difference between flag settings can be compared with reference values for further error detection.
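The flag-and-time-stamp idea can be sketched in a few lines. The class name, step names and the 50 ms limit below are assumptions made for illustration. A real safety PLC would implement this in its embedded firmware rather than in Python.

```python
import time

class FlowMonitor:
    """Records a flag at each key point and checks order and timing at end of scan."""

    def __init__(self, expected_sequence, max_gap_s):
        self.expected = list(expected_sequence)
        self.max_gap_s = max_gap_s  # reference value for time between flags
        self.flags = []

    def mark(self, name, now=None):
        # Set a flag with a time stamp; 'now' can be supplied for testing.
        stamp = time.monotonic() if now is None else now
        self.flags.append((name, stamp))

    def end_of_scan(self):
        # Take the flags for this scan and clear them for the next one.
        flags, self.flags = self.flags, []
        names = [name for name, _ in flags]
        if names != self.expected:
            return False  # a step was skipped, repeated or out of order
        stamps = [stamp for _, stamp in flags]
        # Compare each time difference with the reference value.
        return all(0 <= b - a <= self.max_gap_s for a, b in zip(stamps, stamps[1:]))

monitor = FlowMonitor(["read_inputs", "solve_logic", "write_outputs"], max_gap_s=0.05)
monitor.mark("read_inputs", 0.00)
monitor.mark("solve_logic", 0.01)
monitor.mark("write_outputs", 0.02)
scan_ok = monitor.end_of_scan()
```

A scan that skips `solve_logic`, or one in which a step takes longer than the reference value, makes `end_of_scan()` return `False`, at which point the system would take its fault action.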

Another software diagnostic is called "reasonableness checking." When the results of computations should always be within known limits, the computed outputs can be tested to see if they exceed those limits. In this way systematic faults can be detected before an erroneous system action occurs.
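A minimal sketch of a reasonableness check follows, assuming a computed valve command that must always lie between 0% and 100% and an assumed safe state of 0% (closed). Both the limits and the safe state are illustrative choices, not values from any product.

```python
def reasonable(value, low, high):
    # NaN fails both comparisons, so it is rejected as well.
    return low <= value <= high

def command_valve(computed_percent):
    """Return (status, output); fall back to the assumed safe state on a bad result."""
    if not reasonable(computed_percent, 0.0, 100.0):
        return ("FAULT", 0.0)  # assumed safe state: valve closed
    return ("OK", computed_percent)
```

The check runs before the output is applied, so an out-of-range result is caught before it can drive an erroneous action.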

Aside from computational results, many states and values are derived and stored within software control. When values are mutually exclusive, additional reasonableness checks on this data can flag faults before erroneous states occur. The same mechanism can be used for message schemes between software-based systems.
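The same idea applies to stored states. In the hypothetical example below, a valve's "open" and "closed" limit-switch states are mutually exclusive, so seeing both at once is treated as a fault rather than as a valid state. The state names are assumptions for illustration.

```python
def valve_state(open_switch, closed_switch):
    """Derive a valve state from two limit switches that must never both be true."""
    if open_switch and closed_switch:
        return "FAULT"  # mutually exclusive values both set
    if open_switch:
        return "OPEN"
    if closed_switch:
        return "CLOSED"
    return "TRAVELLING"  # neither switch made: valve is between positions
```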

The data used in a safety PLC must be protected from corruption. Critical data is identified by analyzing the execution flow of critical software functions. Often done with dataflow diagrams, this analysis identifies the software processes that perform critical functions found in the safety requirements. These functions include both the diagnostics and the execution of the user safety program.

The data associated with these software processes is termed critical data. Critical data must be stored so it can't become corrupted in an undetected manner by systematic software fault or by hardware failure.
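One common way to make corruption detectable is to store a check value alongside each critical item and verify it on every read. The sketch below uses a CRC-32 from Python's standard `zlib` module. The `CriticalValue` class is hypothetical, and the choice of CRC-32 is an assumption rather than something the standards prescribe.

```python
import struct
import zlib

class CriticalValue:
    """Holds one floating-point value together with a CRC of its stored bytes."""

    def __init__(self, value):
        self.write(value)

    def write(self, value):
        self._raw = struct.pack("<d", value)   # 8-byte little-endian double
        self._crc = zlib.crc32(self._raw)      # check value stored with the data

    def read(self):
        # Verify before use so corruption cannot go undetected.
        if zlib.crc32(self._raw) != self._crc:
            raise RuntimeError("critical data corrupted")
        return struct.unpack("<d", self._raw)[0]

setpoint = CriticalValue(87.5)
```

Any change to the stored bytes that does not also update the CRC, whether caused by a stray software write or a memory fault, makes `read()` raise instead of returning a bad value.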

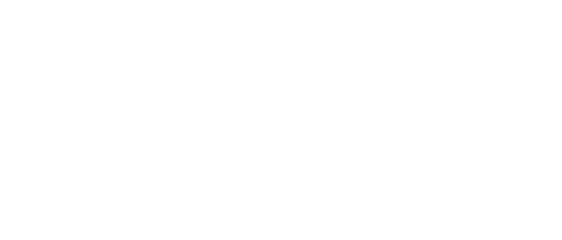

Figure 2 shows a dataflow diagram with a chain of processes and a reverse calculation check on critical data. Process 8 provides a crosscheck on Processes 1-3 to detect an error in the normal process chain. While Processes 1-3 may provide a high-accuracy result based on product specifications, Process 8 only checks that the result falls within the product safety accuracy. That tolerance is usually looser than the product specification, but it is tight enough to detect an erroneous software condition.

A dataflow diagram with a chain of processes and a reverse calculation check on critical data. Process 8 provides a crosscheck on Processes 1-3 to look for any errors in the normal process chain.
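A reverse calculation check of this kind might look like the sketch below. The three forward steps merely stand in for Processes 1-3, and the 12-bit converter, 0-250 engineering range and 2%-of-span safety tolerance are all assumed values for illustration.

```python
SPAN_COUNTS = 4095                  # assumed 12-bit analog converter
RANGE_LOW, RANGE_HIGH = 0.0, 250.0  # assumed engineering range
SAFETY_TOLERANCE = 0.02             # assumed safety accuracy, fraction of span

def forward_chain(counts):
    """Stand-in for Processes 1-3: raw counts to an engineering value."""
    fraction = counts / SPAN_COUNTS                            # step 1: normalize
    value = RANGE_LOW + fraction * (RANGE_HIGH - RANGE_LOW)    # step 2: scale
    return round(value, 3)                                     # step 3: final result

def reverse_check(counts, result):
    """Stand-in for Process 8: recompute counts from the result and compare."""
    back = (result - RANGE_LOW) / (RANGE_HIGH - RANGE_LOW) * SPAN_COUNTS
    return abs(back - counts) <= SAFETY_TOLERANCE * SPAN_COUNTS

result = forward_chain(2048)
chain_ok = reverse_check(2048, result)
```

The reverse path is deliberately coarser than the forward path. It does not need to reproduce the high-accuracy result, only to notice when the forward chain has produced something grossly wrong.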

Firewalls Around Critical Functions

When safety-critical functions must be combined with non-safety-critical functions, the design must include sufficient safeguards for non-interference. This means that any non-safety operations, such as data acquisition from a safety system to a plant manager console screen, can't hamper or inhibit in any way the safe operation or fault-detection mechanisms of the safety system.

If any non-safety functions have the possibility of writing data to a safety system, the writes must be under controlled circumstances in an allowed configuration mode. The system design must reject any unexpected changes to the system.
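The controlled-write rule can be sketched as a small guard around the safety parameters. The class, method names and the simple boolean authorization are hypothetical. A real product would tie configuration mode to its own access controls.

```python
class SafetyParameters:
    """Rejects writes unless the system is in an allowed configuration mode."""

    def __init__(self, values):
        self._values = dict(values)
        self._config_mode = False

    def enter_config_mode(self, authorized):
        # Hypothetical authorization flag; stands in for a key switch or password.
        self._config_mode = bool(authorized)

    def exit_config_mode(self):
        self._config_mode = False

    def read(self, name):
        return self._values[name]  # reads never change safety data

    def write(self, name, value):
        # Reject unexpected changes: wrong mode or unknown parameter.
        if not self._config_mode or name not in self._values:
            return False
        self._values[name] = value
        return True

params = SafetyParameters({"trip_setpoint": 87.5})
rejected = params.write("trip_setpoint", 95.0)  # not in configuration mode
```

Non-safety functions such as a console display can call `read()` freely, while a write outside configuration mode leaves the stored value untouched.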

Software Complexity

Safety PLC standards demand special techniques to reduce software complexity. Developers must carefully examine operating systems for task interaction. Real-time interaction, such as multitasking and interrupts, is avoided. This is because many of the most insidious software faults have been traced to unanticipated interaction between software programs and common resources used by multiple software tasks.

When multitasking is used, real-time interaction of tasks requires extensive review and testing. It's especially important to avoid the use of common resources such as I/O registers and memory by asynchronous tasks in a multitasking environment.

Extra software testing techniques are required for safety PLCs during software development. The findings and assumptions of the criticality analysis must be proven.

A series of "software fault injection" tests must be run to verify data integrity checking. The programs are deliberately corrupted during testing to ensure predictable, safe response of the software. Hardware emulators, specific for the microprocessor, are often used to set break points and alter program data; then the program is allowed to continue to see if the fault was detected.
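In miniature, a fault injection campaign deliberately corrupts data and confirms that the diagnostic under test notices. The sketch below flips every bit of a sample record in turn. The payload, function names and the deliberately weak length check are assumptions for illustration, and a real campaign would corrupt live program data through an emulator.

```python
import zlib

def crc_check(data, reference_crc):
    """Diagnostic under test: True when data still matches its stored CRC-32."""
    return zlib.crc32(bytes(data)) == reference_crc

def length_check(data, reference_length):
    """Deliberately weak diagnostic, included only for comparison."""
    return len(data) == reference_length

def inject_single_bit_faults(payload, is_intact):
    """Flip each bit of payload in turn; return the faults the diagnostic missed."""
    missed = []
    for byte_index in range(len(payload)):
        for bit in range(8):
            corrupted = bytearray(payload)
            corrupted[byte_index] ^= 1 << bit  # inject one fault
            if is_intact(corrupted):           # diagnostic failed to notice
                missed.append((byte_index, bit))
    return missed

payload = b"TRIP_SETPOINT=87.5"
reference_crc = zlib.crc32(payload)
missed_by_crc = inject_single_bit_faults(payload, lambda d: crc_check(d, reference_crc))
missed_by_length = inject_single_bit_faults(payload, lambda d: length_check(d, len(payload)))
```

The CRC check catches every injected single-bit fault, while the length check misses all of them. Listing the missed faults gives third-party inspectors a record of what was injected and what was detected.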

An alternative test method uses custom software built into the program. This requires a monitor program to accept user input about special test codes. These test codes invoke fault injection functions that are time dependent and not easily performed by an emulator. The testing must be fully documented so third-party inspectors can understand the operation. While this activity is not justified in most software development, this is exactly how the most harmful and covert software design faults are uncovered.

Fault and Change Tracking

When suspected problems are found in the software design or code, they must be recorded and reviewed using a formal system¹. Not every reported problem is a real defect, and reports that turn out not to be defects should be closed with the rationale for that determination recorded. Not every problem found is reliability or safety related.

When a problem is investigated and deemed important enough to fix, the development team should perform an impact analysis of the suspected defect. The analysis should include accurate problem description, effect of the problem on critical functions, description of the proposed solution, and effect of the proposed solution on safety functions.

A database should contain all necessary details of the activity related to problem identification and tracking. Items to clearly identify in this database are author, date and product/version where problem was found; problem description, with any particular test setup details or circumstances; implementer log that includes change notes and files affected; authorization notes for accepting the change; time estimates and actual time used; and test data to see that the fix was correct.
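The items listed above map naturally onto a record structure. The sketch below is one possible layout using a Python dataclass. The field names and the `missing_for_closure` helper are hypothetical, and a real tracking database would add its own identifiers and workflow states.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class ProblemReport:
    author: str
    reported_on: date
    product_version: str              # product/version where the problem was found
    description: str
    test_setup: str = ""              # particular setup details or circumstances
    change_notes: list = field(default_factory=list)    # implementer log
    files_affected: list = field(default_factory=list)
    authorization: str = ""           # notes for accepting the change
    estimated_hours: float = 0.0
    actual_hours: float = 0.0
    verification_results: list = field(default_factory=list)  # test data for the fix

    def missing_for_closure(self):
        """List the items still empty before the report could be closed."""
        missing = []
        if not self.change_notes:
            missing.append("change_notes")
        if not self.files_affected:
            missing.append("files_affected")
        if not self.authorization:
            missing.append("authorization")
        if not self.verification_results:
            missing.append("verification_results")
        return missing

report_entry = ProblemReport(
    author="J. Doe",                  # placeholder values for illustration
    reported_on=date(2003, 1, 15),
    product_version="v1.2",
    description="Diagnostic not run in bypass mode",
)
```

Calling `report_entry.missing_for_closure()` on the new record returns all four closure items, which makes it easy to see at review time what has not yet been documented.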

Software Process Improvement

Problems discovered in the software development process that involve safety-critical functions must be treated with great scrutiny. The step in the development process where the problem occurred should be identified².

Some problems can be traced to design or implementation, but the greater number of problems is often traced to missing or inadequately defined requirements. When the latter case occurs, the lifecycle model loop must be reviewed to determine where to start implementation of the fix and any related documents that need to change.

It's also useful to identify the error-detection step in the development process that should have found the problem. If the problem was discovered at a later step in the process, then improve the process for future developments². While it sometimes seems like a problem is isolated to a specific area of software, often the problem is far-reaching.

The design documentation referenced by the problem area must be reviewed for non-obvious interface effects. For example, there can be subtle timing elements that affect message schemes that are safety-critical, or an uncommon but likely mode of operation may inhibit a critical diagnostic under specific conditions.

References:

1. Mavis, S. A., "An Organized Way of Tracking Faults in the Development Process," Proceedings of the International Symposium of Engineered Software Systems (ISESS), Malvern, Pa., London, World Scientific, 1993.

2. Bukowski, J. V., and Goble, W. M., "Software-Reliability Feedback: A Physics of Failure Approach," Proceedings of the Reliability and Maintainability Symposium, New York, IEEE, 1992.
