Purdue University researchers pioneer organic eyes, a biomimetic approach to computer vision systems that makes machinery more energy-efficient

Conventional silicon architecture has taken computer vision a long way, but Purdue University researchers are developing an alternative path, taking a cue from nature, that they say is the foundation of an artificial retina. Like our own visual system, the device is geared to sense change and designed to be more efficient.

“Our long-term goal is to use biomimicry to tackle the challenge of dynamic imaging with less data processing,” explains Jianguo Mei, the Richard and Judith Wien Professor of Chemistry in Purdue’s College of Science. “By mimicking our retina in terms of light perception, our system can be potentially much less data-intensive, though there is a long way ahead to integrate hardware with software to make it become a reality.”

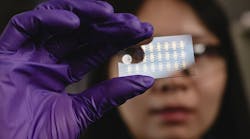

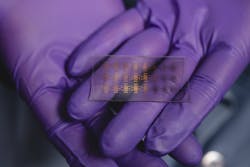

Mei and his team drew their inspiration from light perception in retinal cells. As in nature, light triggers an electrochemical reaction in the prototype device they have built (Figure 1). The reaction strengthens steadily and incrementally with repeated exposure to light and dissipates slowly when light is withdrawn, creating what is effectively a memory of the light information the device received. That memory could potentially be used to reduce the amount of data that must be processed to understand a moving scene.

“This invention is still very early in its development,” explains Mei. In a paper published in Nature Photonics, the Purdue team demonstrated its concept of an artificial retina, called “organic eyes,” and showed the benefits of the new device. “Our long-term vision is to make organic eyes that can mimic human or animal visual systems, featuring ion and electron transport and enabling optical sensing, memorization and recognition,” Mei says.

“To make it suitable for industrial applications, there is a long way to go to optimize our devices and integrate software and hardware,” explains Mei. “If we can make it happen in the next few years, it is expected this technology will benefit many industries, including autonomous driving.”

The team calls the device an organic electrochemical photonic synapse and says it more closely mimics how the human visual system works and has greater potential as the foundation of a device for human-machine interfaces (HMIs).

“I can definitely foresee this technology becoming a part of vision systems,” says Dale Tutt, vice president, industry strategy, at Siemens Digital Industries Software (Figure 2). “In the studies they are doing at Purdue University, researchers are able to mimic the results of the human eye in a way that requires less data requirements. That is intriguing to me.”

Applications in the works

Across the many applications that rely on vision systems, a sensor that lets the machine make decisions faster with less data should also lower power requirements, making the machine more energy-efficient, explains Siemens’ Tutt. “I am interested to see where this evolution of computerized vision systems will go,” he says. “As this technology is modeled and a digital twin of the eye is created to generate a computer vision system, I think it could be implemented within the next five to 10 years. I do not think we are talking decades.”

The design may also be useful for neuromorphic computing applications that operate on principles similar to the architecture of the human brain, says Ke Chen, a graduate student in Mei’s lab and lead author of the paper that tested the device on facial recognition (Figure 3).

“In a normal computer vision system, you create a signal, and then you have to transfer the data from memory to processing and back to memory; it takes a lot of time and energy to do that,” says Chen. “Our device has integrated functions of light perception, light-to-electric-signal transformation and on-site memory and data processing.”

In industrial systems or machinery, there is typically a sensor with a computer behind it, and the computer is doing the calculations and work, explains Tutt. “It appears that sensor could easily be replaced with more efficient optical sensors, which would lead to different algorithms that require less computing power to do the calculations and analysis of what the vision sensor is capturing,” he says. “In theory, many of the sensors could be replaced with this newer or evolutionary technology. I might even go so far as to say it has the potential to be revolutionary technology, especially if we can combine it with artificial intelligence (AI) to truly mimic not only the vision powers of a human, but also the ability to problem-solve and make decisions the way a human does.”

Mei’s vision system, like human vision, is relatively low-resolution but is well-suited to sensing movement. Human eyes have a resolution in the neighborhood of 15 microns. The prototype device, which houses 18,000 transistors on a 10-centimeter square chip, has a resolution of a few hundred microns, and Mei says the technology could be improved to reach a resolution of about 10 microns.

“When we look at industrial vision applications, the first implementation that comes to mind is robotics in a factory or other similar situations that currently leverage computer vision systems,” says Tutt. “This technology could lead to a more efficient operation, while still delivering the same or possibly even better results than those being achieved with some of today’s more advanced systems. Beyond that, I think there are many other applications for optical sensors: in autonomous cars, tractors, aircraft or any other place where decision-making relies on accurate vision. Industrial vision applications could be an effective and efficient alternative to current computer vision systems, providing more accuracy while requiring significantly less energy and computing power.”

Worker shortage woes

This technology also could help address some of the challenges companies face due to an inability to hire enough workers, notes Siemens’ Tutt. “Industrial vision applications could further enable the automation of jobs that cannot be filled whether due to a shortage of skilled workers or because they are jobs that no one wants, such as cases where the tasks need to be performed in hazardous environments,” he says.

“Our eye and brain aren’t as high-resolution as silicon computing, but the way we process the data makes our eye better than most of the imaging systems we have right now when it comes to dealing with data,” says Purdue’s Mei. “Computer vision systems deal with a humongous amount of data because the digital camera doesn’t differentiate between what is static and what is dynamic; it just captures everything.”
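To make the data argument concrete, here is a minimal software sketch of reporting only the pixels that changed between two frames instead of the whole image. It is an illustration of the static-versus-dynamic idea only: the Purdue device does its sensing in hardware, and the function name `changed_pixels`, the 8-by-8 frame size and the threshold of 10 are assumptions made for this example.

```python
def changed_pixels(prev, curr, threshold=10):
    """Return (row, col, value) for pixels whose brightness moved by more than threshold."""
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (old, new) in enumerate(zip(prev_row, curr_row)):
            if abs(new - old) > threshold:
                events.append((r, c, new))
    return events


# Hypothetical 8x8 grayscale frames: a static background with one pixel that brightens.
frame_a = [[50] * 8 for _ in range(8)]
frame_b = [row[:] for row in frame_a]
frame_b[3][4] = 200

events = changed_pixels(frame_a, frame_b)
total = len(frame_b) * len(frame_b[0])
print(f"{len(events)} of {total} pixels changed")
```

A conventional camera would pass along every pixel of both frames. A change-based readout passes along only the entries in `events`, which is where the potential savings in processing would come from.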

Rather than going straight from light to an electrical signal, Mei and his team first convert light to a flow of charged atoms called ions, a mechanism similar to that which retinal cells use to transmit light inputs to the brain. They do this with a small square of a light-sensitive polymer embedded in an electrolyte gel. Light hitting a spot on the polymer square attracts positively charged ions in the gel to the spot and repels negatively charged ions, creating a charge imbalance in the gel.

Repeated exposure to light increases the charge imbalance in the gel, a feature which can be used to differentiate between the consistent light of a static scene and the dynamic light of a changing scene. When the light is removed, the ions remain in their charged configuration for a short period of time in what can be considered a temporary memory of light, gradually returning to a neutral configuration.
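The build-up and fade described above can be pictured with a toy leaky-integrator model. This is a sketch under stated assumptions, not the model used in the Nature Photonics paper: the function name `simulate_charge`, the `gain` and `retention` values and the unitless charge scale from 0 to 1 are all hypothetical.

```python
def simulate_charge(light, gain=0.2, retention=0.9):
    """Toy model: charge imbalance grows with each lit step and fades when dark."""
    charge = 0.0
    trace = []
    for lit in light:
        # Every step, some of the imbalance relaxes back toward neutral.
        charge *= retention
        if lit:
            # Each exposure adds an increment that shrinks as the charge nears 1.
            charge += gain * (1.0 - charge)
        trace.append(charge)
    return trace


# Five light pulses separated by dark steps, then ten more dark steps.
pulses = [1, 0] * 5 + [0] * 10
trace = simulate_charge(pulses)

for step, value in enumerate(trace):
    print(step, round(value, 3))
```

In this sketch each pulse leaves the charge higher than the pulse before it, and once the light stays off the value drifts back toward zero rather than dropping at once, which is the "temporary memory" behavior the researchers describe.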

The positively charged spot serves as the gate on a transistor, allowing a small electric current to flow between a source and a drain in the presence of light. Much as in a conventional photodetector, the electric current is indicative of light intensity and wavelength, and it is passed to a computer for image recognition. But, while the output, an electric current, is the same, it is the intermediate step of converting light to an electrochemical signal that creates the motion-sensing and memory capabilities.

Mei’s electrochemical transistor is one of an emerging class of optoelectronic devices that seek to integrate light perception and memory, but it is distinguished by a charge imbalance that increases in smooth and steady increments with repeated exposure to light and decays slowly.

Mother Nature

“It seems like it would be reasonably easy to integrate this technology into systems, replacing one vision technology for a more effective and efficient one, but that makes one wonder: Where else could this lead in improving vision systems and beyond? What else can we learn from Mother Nature?” asks Tutt.

“For example, sticking with vision for a moment, how could we improve night vision?” wonders Tutt. “Maybe there is something that can be done to recreate the vision of an owl. Night vision is critical to their survival. Currently, we leverage radar, but owls seemingly just have massive eyeballs. Could we replicate that technology? And, while we are on the subject of birds, let’s talk about flight, or even strength with their hollow bone structure. What other natural systems can be mimicked to make manufacturing more innovative, efficient and sustainable?”

Machine builders or system integrators could also find the sustainability benefits of this technology to be interesting, suggests Tutt. “The human eye burns a lot less energy than a sensor does, so if we begin replacing existing sensors with more capable sensors that use way less power to operate, the efficiency results could be massive and result in operations that are much more sustainable and cost-efficient,” he says. “After all, energy costs money, so using less not only helps the environment, but also the bottom line.”

At Purdue, Mei and Chen are joined in the research by Hang Hu, Inho Song, Won-June Lee and Ashkan Abtahi, as well as researchers at the University of Texas at San Antonio (Figure 4). “Artificial Retina Based on Photon-Modulated Electrochemical Doping” was published in Nature Photonics with the support of Ambilight.

Mei disclosed his innovation to the Purdue Innovates Office of Technology Commercialization, which has applied for patents on the intellectual property.